Description

Google BigQuery is a fully managed, serverless cloud data warehouse for running fast queries over large datasets. It scales to petabytes of data while remaining cost-efficient, which makes it well suited to turning large volumes of data into actionable insights.

Prerequisites

Before setting up BigQuery as a Data Destination, ensure you have:

- A Google Cloud Project with BigQuery enabled.

- A Google Cloud Service Account with the following roles:

  - BigQuery User

  - BigQuery Data Editor

- A Service Account Key (JSON file) for authentication.

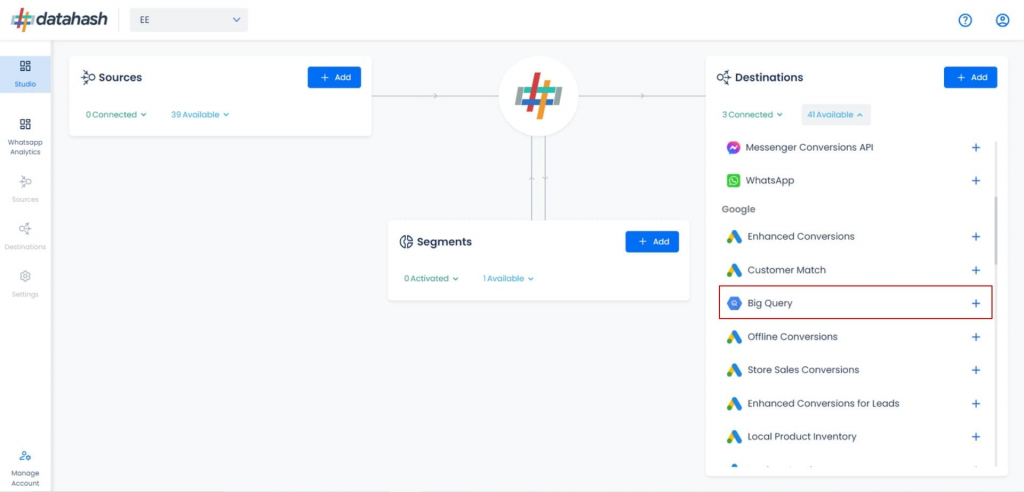
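Before uploading the key, it can help to confirm that the downloaded file really is a service account key. The following is a minimal sketch, not part of Datahash: the function name `check_service_account_key` is hypothetical, and the field list is an assumption based on the usual layout of Google Cloud service account key files.

```python
import json

# Fields a Google Cloud service account key file normally contains.
# This list is an assumption for the sketch; Datahash may check more or fewer.
REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_service_account_key(path):
    """Return a list of problems found in the key file (empty if none)."""
    try:
        with open(path, encoding="utf-8") as fh:
            key = json.load(fh)
    except (OSError, ValueError) as exc:
        # ValueError covers malformed JSON and undecodable bytes.
        return [f"cannot read key file: {exc}"]

    if not isinstance(key, dict):
        return ["key file is not a JSON object"]

    problems = [
        f"missing field: {name}" for name in REQUIRED_FIELDS if not key.get(name)
    ]
    # A user or OAuth client file would carry a different "type" value.
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"unexpected type: {key['type']!r}")
    return problems
```

An empty list means the file has the expected shape. It does not prove that the key is still active or that the account holds the roles listed above.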

Getting Started

- Log in to your Datahash account at https://studio.datahash.com/login.

- Navigate to Destinations → Google.

- Click on the BigQuery connector tile.

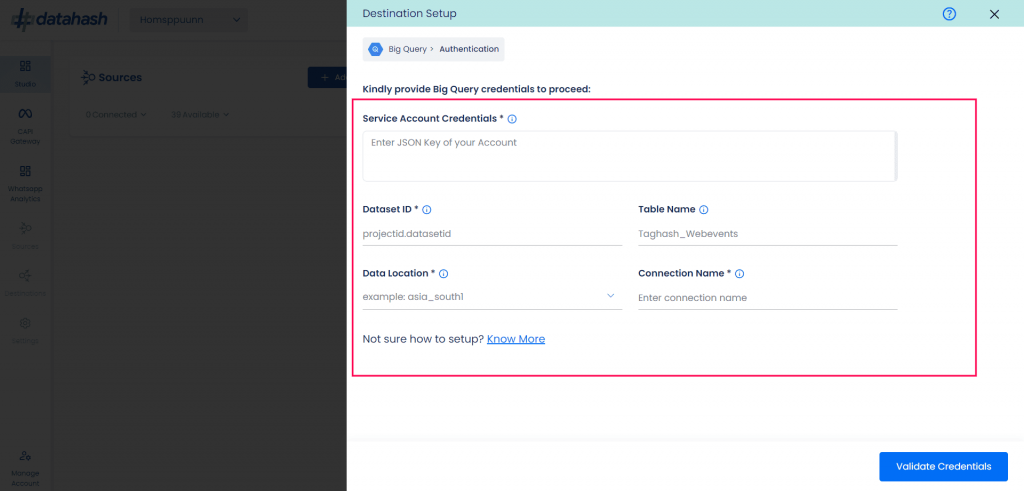

Provide Credentials

Fill in the following required fields (marked with an asterisk in the platform):

- JSON Key → The GCP Service Account credentials JSON file. This enables Datahash to authenticate and load data into your BigQuery instance.

  - Create a Service Account in your GCP Project specifically for Datahash use.

- Dataset ID → The unique identifier of the BigQuery dataset where data will be pushed. A dataset is a container that holds tables, views, and metadata.

- Table Name → The name of the table where data will be stored.

  - You can provide the name of an existing table; Datahash validates it before use.

  - If no table exists, Datahash will create one automatically with the given name.

  - Make sure the table name is unique within the dataset.

- Data Location → The GCP region where your BigQuery dataset is hosted.

Finally, provide a connection name for easy identification in the Datahash dashboard.
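The required fields above can be checked locally before they are typed into the form. This is a rough sketch only: `BigQueryDestinationForm` is a hypothetical helper, not a Datahash object, and it assumes the Dataset ID field takes the bare dataset name. The character rule follows BigQuery's dataset naming rules (letters, digits, and underscores, up to 1,024 characters); Datahash may apply different checks.

```python
import re
from dataclasses import dataclass

# BigQuery dataset IDs: letters, digits, underscores, up to 1,024 characters.
DATASET_ID_RE = re.compile(r"[A-Za-z0-9_]{1,1024}")


@dataclass
class BigQueryDestinationForm:
    """Hypothetical mirror of the required form fields."""

    key_path: str
    dataset_id: str
    table_name: str
    data_location: str

    def problems(self):
        """Return human-readable problems, in form order (empty if none)."""
        found = []
        if not self.key_path.strip():
            found.append("JSON Key is required")
        if not DATASET_ID_RE.fullmatch(self.dataset_id):
            found.append(
                "Dataset ID may contain only letters, digits, and underscores"
            )
        if not self.table_name.strip():
            found.append("Table Name is required")
        if not self.data_location.strip():
            found.append("Data Location is required")
        return found
```

For example, `BigQueryDestinationForm("key.json", "marketing_events", "conversions", "US").problems()` returns an empty list, while a dataset ID such as `marketing-events` is flagged because of the hyphen. All values here are made up for illustration.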

Validate Connection

- Click Validate Credentials to confirm that the configuration and permissions are correct.

- If validation is successful, proceed to the next step.
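If validation fails, the same details can be checked outside Datahash. The sketch below assumes the `google-cloud-bigquery` Python package is installed; `make_client` and `preflight` are hypothetical helper names, and this is not how Datahash itself validates the connection. It confirms that the service account can read the dataset, that the dataset location matches the Data Location entered, and whether the table already exists.

```python
def make_client(key_path):
    """Build a BigQuery client from the service account key file."""
    # Imported lazily so preflight() can be exercised without the library.
    from google.cloud import bigquery

    return bigquery.Client.from_service_account_json(key_path)


def preflight(client, dataset_id, table_name, expected_location):
    """Return (ok, message) describing the dataset and table state."""
    # get_dataset raises an API error if the dataset is missing or the
    # account lacks permission to read it.
    dataset = client.get_dataset(dataset_id)

    actual = dataset.location or ""
    if actual.lower() != expected_location.lower():
        return False, (
            f"dataset location is {actual!r}, expected {expected_location!r}"
        )

    existing = {item.table_id for item in client.list_tables(dataset_id)}
    if table_name in existing:
        return True, "table exists and will be reused"
    return True, "table not found; Datahash is expected to create it"
```

A typical call would be `preflight(make_client("datahash-key.json"), "marketing_events", "conversions", "US")`, where the file name, dataset, and table are placeholders. Taking the client as a parameter keeps the check easy to run against a stub during testing.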

Complete Setup

- Click Finish to complete the setup.

- The new BigQuery connection will now appear in your dashboard under Data Destinations.